Sceneflows

Background

Description

The central concept of the modeling approach with scenes and sceneflows is the separation of dialogue content and structure. Multimodal dialogue content is specified in a set of scenes that are organized in a scenescript. The narrative structure of an interactive performance and the interactive behavior of the virtual characters are controlled by a sceneflow, a statechart variant that specifies the logic according to which scenes are played back and commands are executed. Sceneflows provide concepts for the hierarchical refinement and the parallel decomposition of the model, as well as an exhaustive runtime history and multiple interaction policies. Sceneflows thus adopt and extend concepts found in similar statechart variants.

Creating Multimodal Dialogue Content

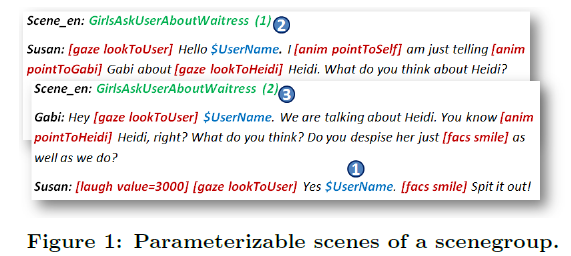

As shown in Fig. 1, a scene resembles a part of a movie script. It consists of the virtual characters' utterances, which contain stage directions for controlling gestures, postures, gaze and facial expressions, as well as control commands for arbitrary actions that can be realized by the respective character animation engine or by other external modules. Scenescript content can be created both manually by an author and automatically by external generation modules.

Scenes can be parameterized, which makes it possible to create hybrid scenes that combine fixed authored content with variable content (Fig. 1(1)), such as information retrieved from user interactions, sensor input or content generated from knowledge bases. A scenescript may provide a number of variations for each scene. These variations are subsumed in a scenegroup, which consists of the scenes sharing the same name or signature (Fig. 1(2), 1(3)). Different blacklisting strategies can be used to choose one of the scenes from a scenegroup for execution.
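The following Python sketch illustrates how a parameterized scenegroup with a blacklisting strategy might behave. It is not the SceneMaker implementation: the classes `Scene` and `SceneGroup`, the function `play`, the `$user` placeholder syntax and the particular strategy (no variation is repeated until all variations of the group have been played) are assumptions made for illustration.

```python
import random
from dataclasses import dataclass, field
from string import Template


@dataclass
class Scene:
    name: str        # scenes sharing the same name form one scenegroup
    text: Template   # authored text with $placeholders for variable content


@dataclass
class SceneGroup:
    scenes: list
    blacklist: set = field(default_factory=set)  # indices of scenes already played

    def pick(self, rng=random):
        # Assumed strategy: once every variation has been played,
        # clear the blacklist and start over.
        if len(self.blacklist) == len(self.scenes):
            self.blacklist.clear()
        candidates = [i for i in range(len(self.scenes)) if i not in self.blacklist]
        choice = rng.choice(candidates)
        self.blacklist.add(choice)
        return self.scenes[choice]


def play(group, **params):
    # Fill the scene parameters with values known only at runtime.
    scene = group.pick()
    return scene.text.substitute(**params)


welcome = SceneGroup([
    Scene("Welcome", Template("Hello $user, nice to see you.")),
    Scene("Welcome", Template("Hi $user! Shall we start?")),
])
```

Calling `play(welcome, user="Anna")` twice yields both variations in random order before either one is repeated.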

Modeling Dialogue Logic and Context

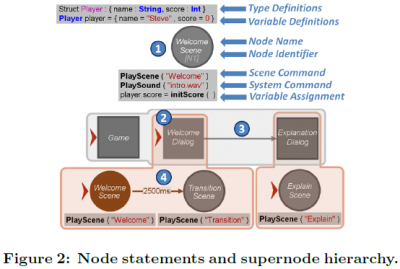

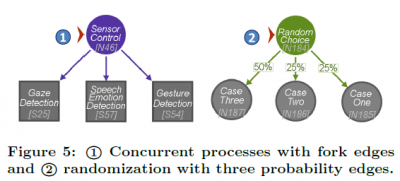

A sceneflow is a hierarchical and concurrent statechart that consists of different types of nodes and edges. A scenenode can be linked to one or more scenegroup playback or system commands and can be annotated with statements and expressions from a simple scripting language, such as type and variable definitions, variable assignments and calls to predefined functions of the underlying implementation language (Fig. 2(1)). A supernode extends the functionality of scenenodes by creating a hierarchical structure. A supernode may contain scenenodes and supernodes that constitute its subautomata. One of these subnodes has to be declared the startnode of that supernode (Fig. 2(2)).

The supernode hierarchy can be used for type and variable scoping. Type definitions and variable definitions are inherited by all subnodes of a supernode. The supernode hierarchy and the variable scoping mechanism imply a hierarchy of local contexts that can be used for context-sensitive reactions to user interactions, external events or changes of environmental conditions.

Different branching strategies within the sceneflow, such as logical and temporal conditions or randomization, as well as different interaction policies, can be modeled by connecting nodes with different types of edges. An epsilon edge represents an unconditional transition (Fig. 2(3)). Epsilon edges are used to specify the order in which computation steps are performed and scenes are played back. A timeout edge represents a timed or scheduled transition and is labeled with a timeout value (Fig. 2(4)). Timeout edges are used to regulate the temporal flow of a sceneflow's execution and to schedule the playback of scenes and computation steps. A probabilistic edge represents a transition that is taken with a certain probability and is labeled with a probability value (Fig. 5(2)). Probabilistic edges are used to create some degree of randomness and desired non-determinism during the execution of a sceneflow. A conditional edge represents a conditional transition and is labeled with a conditional expression (Fig. 3). Conditional edges are used to create a branching structure in the sceneflow that describes different reactions to changes of environmental conditions, external events or user interactions.
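As a rough illustration of the four edge types, the Python sketch below models a node with outgoing edges and a function that decides which edge to take. The names `Edge`, `Node` and `next_edge`, the millisecond unit and the order in which edge types are checked are assumptions of this sketch. The text above does not prescribe how different edge types on the same node are prioritized.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Edge:
    target: str
    kind: str  # "epsilon", "timeout", "probabilistic" or "conditional"
    timeout_ms: Optional[int] = None                     # timeout edges only
    probability: Optional[float] = None                  # probabilistic edges only
    condition: Optional[Callable[[dict], bool]] = None   # conditional edges only


@dataclass
class Node:
    name: str
    edges: list = field(default_factory=list)


def next_edge(node, variables, elapsed_ms, rng=random):
    """Return the outgoing edge to take, or None to stay in the node."""
    # Assumed checking order: conditional, probabilistic, timeout, epsilon.
    for e in node.edges:
        if e.kind == "conditional" and e.condition(variables):
            return e
    prob = [e for e in node.edges if e.kind == "probabilistic"]
    if prob:
        # Draw one edge according to the probability labels.
        return rng.choices(prob, weights=[e.probability for e in prob])[0]
    for e in node.edges:
        if e.kind == "timeout" and elapsed_ms >= e.timeout_ms:
            return e
    for e in node.edges:
        if e.kind == "epsilon":
            return e
    return None
```

A node with a conditional edge on `stop` and a timeout edge of 1000 ms would stay put while `stop` is false and less than a second has passed, then follow whichever edge becomes enabled first.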

Interaction Handling Policies

User interactions, as well as other internally or externally triggered events within the application environment, can arise at any time during the execution of a model. Some of these events need to be processed as fast as possible to meet certain real-time requirements. For example, it may be necessary to interrupt a running dialogue immediately during a scene playback in order to give the user the impression of presence or impact. However, there can also be events that may be processed at some later point in time, allowing currently executed scenes or commands to terminate regularly before the system reacts to the event.

These two different interaction paradigms imply two different interaction handling policies that find their syntactical realization in two different types of interruptibility or inheritance of conditional edges:

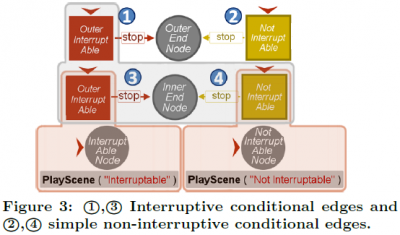

- Interruptive conditional edges (Fig. 3(1), 3(3)) are inherited with an interruptive policy and are used for handling events and user interactions that require a fast reaction. Whenever an interruptive conditional edge of a node can be taken, this node and all descendant nodes may not take any other edges or execute any further command. These semantics imply that interruptive edges closer to the root have priority over interruptive edges farther from the root.

- Non-interruptive conditional edges (Fig. 3(2), 3(4)) are inherited with a non-interruptive policy, which means that a non-interruptive conditional edge of a certain node or supernode can be taken only after the execution of the node's program and after all descendant nodes have terminated. This policy implicitly gives higher priority to conditional edges of nodes that are farther from the root.

Fig. 3 shows a supernode hierarchy with different conditional edges. If the condition stop becomes true during the execution of the two innermost scene playback commands, then the scene within the supernodes with the non-interruptive conditions (Fig. 3(2), 3(4)) will be executed to its end. However, the scene within the supernodes with the interruptive conditions (Fig. 3(1), 3(3)) will be interrupted as fast as possible. In the non-interruptive case, the execution of the sceneflow continues with the inner end node (Fig. 3(4)) before the outer end node is executed (Fig. 3(2)). In the interruptive case, the execution of the sceneflow immediately continues with the outer end node (Fig. 3(1)) because the outer interruptive edge has priority over the inner interruptive edge (Fig. 3(3)).
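The priority rules of the two policies can be sketched in Python as follows. The sketch represents the active part of the hierarchy as a list of levels from the root down to the running node. The names `CondEdge`, `Level`, `interrupting_edge` and `exit_order` are hypothetical, and the model is deliberately simplified to at most one conditional edge per level.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CondEdge:
    target: str
    condition: Callable[[dict], bool]
    interruptive: bool


@dataclass
class Level:
    name: str
    edge: Optional[CondEdge] = None  # simplification: one conditional edge per level


def interrupting_edge(path, variables):
    """Interruptive policy: the edge closest to the root wins.

    `path` lists the active nodes from the root down to the running node.
    """
    for level in path:  # root first
        e = level.edge
        if e and e.interruptive and e.condition(variables):
            return level.name, e.target
    return None


def exit_order(path, variables):
    """Non-interruptive policy: edges are taken from the inside out,
    each one only after all descendant nodes have terminated."""
    taken = []
    for level in reversed(path):  # innermost first
        e = level.edge
        if e and not e.interruptive and e.condition(variables):
            taken.append((level.name, e.target))
    return taken
```

For a hierarchy like the one in Fig. 3, `interrupting_edge` would return the outer supernode's edge, while `exit_order` would list the inner end node before the outer one.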

Modeling Multithreaded Dialogue

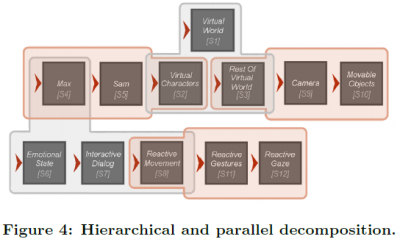

Sceneflows exploit the modeling principles of modularity and compositionality in the sense of a hierarchical and parallel decomposition. Multiple virtual characters and their behavior, as well as multiple control processes for event detection or interaction management, can be modeled as concurrent processes in parallel automata. For this purpose, sceneflows provide two syntactical instruments for the creation of concurrent processes. By defining multiple startnodes for a supernode (Fig. 4), each subautomaton, which consists of all nodes reachable from a startnode, is executed by a separate process. By defining fork edges (Fig. 5(1)), an author can create multiple concurrent processes without the need to change the level of the node hierarchy.
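A minimal Python sketch of the first instrument, multiple startnodes, is shown below. Each startnode gets its own thread that walks its subautomaton. The functions `run_subautomaton` and `run_supernode`, the use of Python threads, the restriction to one successor per node and the rule that the supernode finishes once all subautomata have finished are assumptions of this sketch, not statements about the actual interpreter.

```python
import threading


def run_subautomaton(start, successors, log, lock):
    """Walk one subautomaton along its successor edges (one per node here)."""
    node = start
    while node is not None:
        with lock:
            log.append(node)  # stands in for executing the node's commands
        node = successors.get(node)


def run_supernode(startnodes, successors):
    """Execute every subautomaton of a supernode in a separate thread."""
    log, lock = [], threading.Lock()
    threads = [
        threading.Thread(target=run_subautomaton, args=(s, successors, log, lock))
        for s in startnodes
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # assumption: the supernode ends when all subautomata have ended
    return log
```

With `successors = {"Speak1": "Speak2", "Listen1": "Listen2"}` and the startnodes `Speak1` and `Listen1`, the returned log contains all four nodes. The order within each subautomaton is preserved, while the interleaving between the two is left to the scheduler.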

Following this modular approach, an author is able to separate the task of modeling the overall behavior of a virtual character into multiple tasks of modeling individual behavioral aspects, functions and modalities. Behavioral aspects can be modified in isolation without knowing details of the other aspects. In addition, previously modeled behavioral patterns can easily be reused and adapted. Furthermore, pre-modeled automata that control the communication with external devices or interfaces can be added as plug-in modules that are executed in a parallel process.

Individual behavioral functions and modalities that contribute to the behavior of a virtual character are usually not completely independent, but have to be synchronized with each other. For example, speech is usually highly synchronized with non-verbal behavioral modalities such as gestures and body postures. When modeling individual behavioral functions and modalities in separate parallel automata, the processes that concurrently execute these automata have to be synchronized by the author in order to coordinate all behavioral aspects. This communication is realized by a shared memory model, which allows an asynchronous, non-blocking synchronization of concurrent processes.

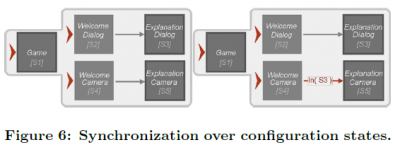

Sceneflows provide two different syntactic features for the synchronization of concurrent processes. First, they allow synchronization over shared variables defined in a common supernode. The interleaving semantics of sceneflows prescribe mutually exclusive access to those variables to avoid inconsistencies. Second, they provide a state query condition (Fig. 6), which represents a more intuitive mechanism for process synchronization. This condition makes it possible to query whether a certain state of a parallel automaton is currently being executed by the sceneflow interpreter.
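Both synchronization features can be approximated in Python with a lock-protected shared context, as sketched below. The class `SharedContext` and its method names are hypothetical. The lock stands in for the mutually exclusive variable access described above, and `in_state` plays the role of the state query condition.

```python
import threading


class SharedContext:
    """Shared memory of a supernode, used by its concurrent subautomata."""

    def __init__(self):
        self._lock = threading.Lock()
        self._variables = {}   # shared variables defined in the supernode
        self._active = set()   # names of the states currently being executed

    def set(self, name, value):
        with self._lock:       # mutually exclusive write access
            self._variables[name] = value

    def get(self, name, default=None):
        with self._lock:       # mutually exclusive read access
            return self._variables.get(name, default)

    def enter(self, state):
        with self._lock:
            self._active.add(state)

    def leave(self, state):
        with self._lock:
            self._active.discard(state)

    def in_state(self, state):
        # State query: returns immediately instead of waiting for the state.
        with self._lock:
            return state in self._active
```

A gesture automaton could, for example, use `ctx.in_state("Speaking")` as the condition of a conditional edge so that it only gestures while the speech automaton is in its `Speaking` state.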

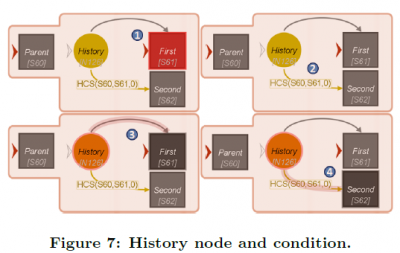

Consistent Resumption of Dialogue

The concept of an exhaustive runtime history facilitates modeling reopening strategies and recapitulation phases of dialogues by falling back on automatically gathered information about past states of an interaction. During the execution of a sceneflow, the system automatically maintains a history memory to record the runtimes of nodes, the values of local variables, executed system commands and scenes that were played back. It additionally records the last executed substates of a supernode at the time of its termination or interruption. The automatic maintenance of this history memory relieves the author of the manual collection of such runtime data, thus efficiently reducing the modeling effort while increasing the clarity of the model and providing the author with information about previous interactions and states of execution. The scripting language of sceneflows provides a variety of built-in history expressions and conditions to query the information stored in the history memory or to delete it.

The history concept is syntactically represented in the form of a special history node, which is an implicit child node of each supernode. When a supernode is re-executed, it starts at the history node instead of its default startnodes. Thus, the history node serves as a starting point for the author to model reopening strategies or recapitulation phases. Fig. 7 shows a simple exemplary use of a supernode's history node and a history condition. At the first execution of the supernode Parent, the supernode starts at its startnode First (Fig. 7(1)). If the supernode Parent is interrupted or terminated at some time and re-executed afterwards, it starts at the history node History. The history memory is queried (Fig. 7(2)) to find out whether the supernode was interrupted or terminated in the node First or the node Second. As the snapshot of the visualized execution shows, depending on the result, either the node First (Fig. 7(3)) or the node Second (Fig. 7(4)) is started via the history node.
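The behavior of the history node in Fig. 7 can be sketched in Python as follows. The class `Supernode` and its methods are hypothetical and record only the last active substate. The history memory described above also stores runtimes, variable values, system commands and played scenes, which this sketch omits.

```python
class Supernode:
    def __init__(self, name, startnode):
        self.name = name
        self.startnode = startnode
        self.history = []  # substates that were active whenever the supernode was left

    def entry_node(self):
        # First execution: default startnode. Re-execution: the history node.
        return self.startnode if not self.history else "History"

    def leave(self, active_substate):
        # Called on termination or interruption of the supernode.
        self.history.append(active_substate)

    def last_substate(self):
        # History query: where was the supernode left the last time?
        return self.history[-1] if self.history else None

    def resume_target(self):
        # Decision taken in the history node: continue where execution stopped.
        return self.last_substate()
```

For a supernode `Parent` with startnode `First` that was interrupted in `Second`, `entry_node()` returns `"History"` on re-execution and `resume_target()` returns `"Second"`.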

Examples

Besides their use in many games and research projects, sceneflows are used in the following interactive storytelling applications:

Contact

For more information see also http://hcm-lab.de/projects/iris/index.php/SceneMaker or contact one of these persons: